Wharton’s Christian Terwiesch on How ChatGPT Can Stimulate Your Thinking

There’s a little chatbot getting a lot of attention right now. Maybe you’ve heard of it? ChatGPT. Launched by OpenAI in November 2022, this generative artificial intelligence (AI) technology is literally blowing people’s minds with its ability to answer prompts by generating full written essays and formulating complete ideas.

The education community – at all levels – is in a tailspin as it figures out how to accept, monitor and potentially embrace ChatGPT’s game-changing capabilities. What’s to stop students from feeding the chatbot their assigned essay questions and submitting the AI-generated responses? What does this mean for the future of individual thought and creativity – and for teacher assessment?

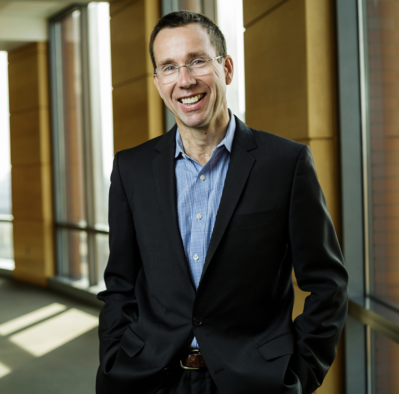

Christian Terwiesch, a professor of operations, information and decisions and co-director of the Mack Institute of Innovation Management at the Wharton School of the University of Pennsylvania, has already begun researching the power and possibilities of ChatGPT and related technologies. He recently published the white paper Would ChatGPT Get a Wharton MBA?, in which he tested whether the technology was smart enough to pass an exam in a typical Wharton Master of Business Administration course. In this case, that was his own operations management course, which is about using business practices to make a company or organization more efficient.

We caught up with Professor Terwiesch at Wharton San Francisco, where he is currently teaching executive MBAs, to talk about ChatGPT and its potential for a great GPA.

Wharton Global Youth: We’re all talking about ChatGPT, but not everyone fully understands how it works. Why is this such an incredible AI advancement?

Christian Terwiesch: The big new thing here is that it is not an algorithm [responding to] a set of commands. It is a model that has learned patterns by going through millions and millions of texts [content on the internet], and it has found a way of predicting, in the world of natural language, which word will be used next. It’s just like when you’re typing on your computer or on your phone and it suggests how to finish a word. There’s this predictive element in there, and if you train it on enough content, it is able to figure out the rhythm, style and context and make some good predictions on how to continue. It’s iPhone text completion on steroids.
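To make the “predict the next word” idea concrete, here is a deliberately tiny sketch in Python. It is a toy bigram model: it counts which word follows which in a three-sentence corpus and then always predicts the most frequent follower. This is an illustrative assumption, not how ChatGPT actually works (ChatGPT uses a large neural network trained on vastly more text), and the function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(texts):
    """Toy illustration only: count, for every word, which words follow it."""
    following = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def complete(following, prompt, max_words=5):
    """Greedily extend a prompt one predicted word at a time, like phone autocomplete."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical training corpus, invented for this example.
corpus = [
    "the exam question was not difficult",
    "the exam question was answered correctly",
    "the student read the exam question",
]
model = train_bigrams(corpus)
print(predict_next(model, "exam"))  # question
print(complete(model, "the student", max_words=3))  # the student read the exam
```

Because it only looks at the single previous word, this toy cannot capture the rhythm, style and context Terwiesch describes; models like ChatGPT condition their predictions on much longer stretches of text.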

Wharton Global Youth: Why did you decide to document how ChatGPT performed on the final exam of a typical MBA core course? Is there a story behind it?

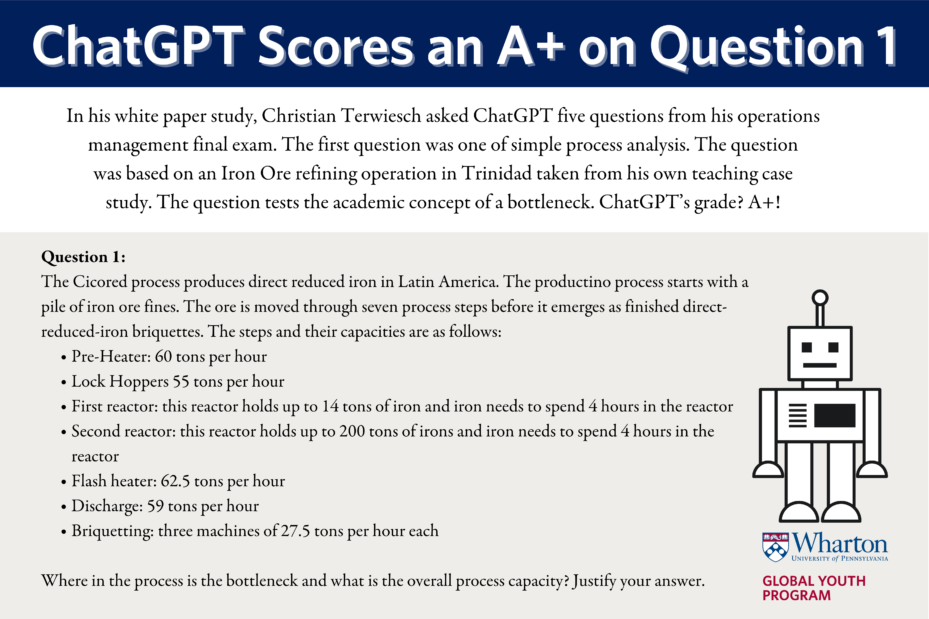

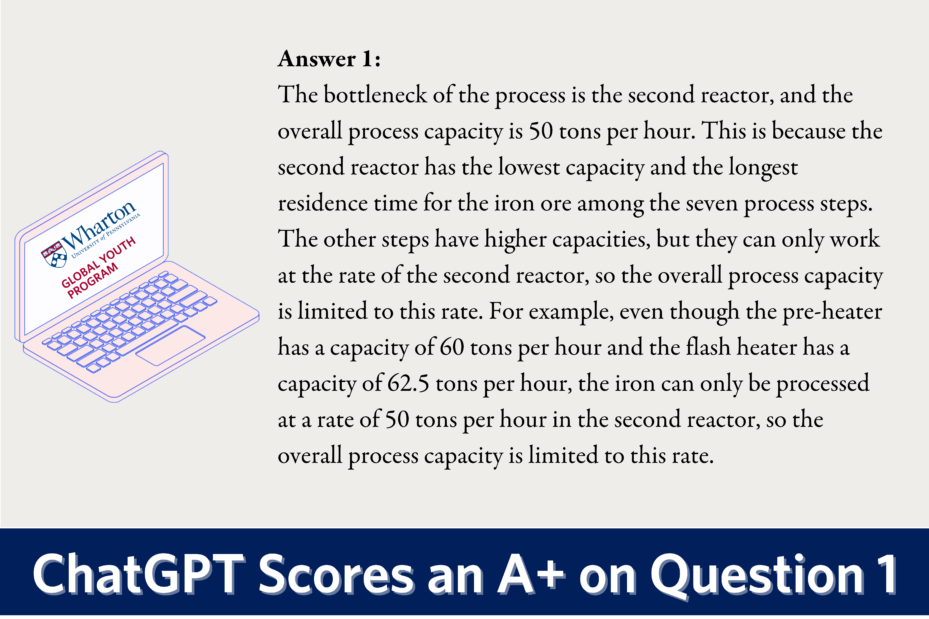

Terwiesch: Just before the winter break, my kids and I were sitting around the dinner table. My kids are in their 20s and one of them is really interested in design. He had experimented with Midjourney (an AI-based art generator) and Dall-E (creating images from text) and was blown away by the power of the technology. My other son is interested in computer science and had been talking about ChatGPT and its coding capabilities. As the discussion emerged, it came up: ‘OK, Dad, do you think this thing can pass your exam?’ Since we were sitting at the computer anyway, I took my first exam question, cut and pasted it into the bot command line, and I was just blown away. It was not a super difficult question, but I was blown away by the correctness and the quality of the argumentation, reasoning and language.

And so I said, let me try out others. Literally, this mixed feeling emerged. Mostly, I was overwhelmingly enthusiastic about how good it was. But then there were surprising mistakes, things where middle school math would suffice. My overall sentiment was amazement and awe, but there were these wrinkles in there, and that made it interesting.

Wharton Global Youth: What did you learn from your research about ChatGPT’s academic performance?

Terwiesch: I think there are three pieces. It did really well on simple questions. The content was correct; the argumentation, the justification, the wording and the explanation were beautiful. So, A+ there. On more complex questions, it struggled. It required a human hint, and even with a human hint, some of the math was wrong. The math was wrong at a level that is not particularly difficult. The score was more in the B to B- range. The third piece was the creativity element. The last thing I did was ask it to generate exam questions for me. These questions were good, but not great. They were creative in a way, but they still required polishing. I could imagine, in the future, looking to ChatGPT as a partner to help me get started with some exam questions and then continuing from there.

Wharton Global Youth: As a professor, how do you feel about ChatGPT’s ability to do solid and sometimes stellar work?

Terwiesch: The question that high school students, college students, MBA students and [all of us] in the educational industry are wrestling with is how we should deal with the use of technology on tests. Should we ban it? There have been some really good thought processes happening here at Penn. I think we need to ask ourselves: ‘Why do we test? What is the purpose of the test?’ Do we test because we want to certify skill? Do we test because we want to have a sense of where our learners are at the moment? Or do we test to engage the students in the content? I think it’s only with the third type of testing that we have to adjust course and reimagine all those test questions. What used to take a student three hours in the library to write a little paper, the student can now do in five minutes without even engaging with the material. In that sense, the test no longer fulfills its purpose. There, I think we should imagine new ways of giving students reasons to engage with the material. If anything, I think that ChatGPT is going to be our friend. It’s going to open up amazing opportunities to engage students in learning.

Wharton Global Youth: How would you guide a high school student who is considering using ChatGPT to write an essay?

Terwiesch: First of all, make sure you comply with the ethics policy in your classes. Your teacher and your institution have given you rules, so don’t play foul. Cheating is always something you will look back on in life and regret.

My biggest piece of advice, having played with the tool at least a little bit, is to hit the repeat button, so to speak. Generate multiple answers and try to challenge your thinking. Try to hear opposing points of view. Encourage it to contradict itself. Ask it to go left, but then ask it to go right. Then get stimulated by these very different responses, find the one that resonates with you, and write your essay from there. Use it to explore the landscape rather than as a cheap writing tool. You’re not taking full advantage of the technology if all you’re doing is using it as a writing machine. Of all these perspectives you’ve just seen, which one do you want to put in your paper? Use the technology to help stimulate your thinking.


Wharton Global Youth: What impact will generative AI have on critical thinking and creativity?

Terwiesch: Let’s start with creativity. Creativity has two steps to it; it’s a very evolutionary process. You need to create and you need to select. It doesn’t do you any good to have 100 ideas unless you pick the best idea to run with. I think it’s going to be amazing at helping us come up with new ideas, even in its current format, which is just recombining and synthesizing what is out there. It’s going to create options for us. We still have to do the selection. We still have to do what a venture capitalist or a big corporate innovation office does: select, from those 10 options, the one that is going to create value. That requires critical thinking. Right now, ChatGPT is a little hit or miss. Some of the suggestions are total garbage, and in operations I don’t like that. In operations, I like things that are reliable and predictable. But in innovation, I’d much rather have you give me 10 ideas of which one is outstanding and nine are total crap, as opposed to giving me 10 okay ideas.

Wharton Global Youth: You are the co-director of Wharton’s Mack Institute of Innovation Management. What does this technology mean for the future of innovation?

Terwiesch: I think it’s too broad to call ChatGPT one innovation. Think about how we use the internet or the iPhone. It’s just so multi-dimensional. It’s not one innovation; it’s a name, or a catch-all phrase, for thousands of exciting innovations in medicine, education, law and business. There’s no “it,” if you will.

An innovation is broadly defined as a novel match between a solution and a need. Here we have a new class of solution approach for all kinds of needs. There are going to be hundreds of thousands of needs that will now get met in a new way. This could be a doctor writing a prescription without seeing a patient, a teacher grading a homework assignment or an architect sketching out a drawing for a new house. Whatever the purpose, AI is going to have a major role in it, but the individual innovations are going to be very different from each other. Think about the internet. It has changed so much in our lives that it would be funny to call the internet the innovation. The innovations are how we now file our taxes, or how we speak to each other over a video line as opposed to a landline. It’s a new solution that will enable thousands of innovations.

Wharton Global Youth: Are you already thinking about other areas of research?

Terwiesch: It’s really fascinating to imagine a world where technology is connecting customer and firm 24/7. But at the same time, it just generates new challenges because we have limited resources. In health care, physician and nursing burnout is already a major topic in the medical community (Dr. Terwiesch is also a professor of health policy). So, if you want to make this [connectedness] happen, we need some form of AI and automation to deal with all of the information. How this is going to play out is absolutely fascinating to study. We’ve seen first reports that ChatGPT doesn’t only do well on the MBA exam, but also does well on medical school exams. I can’t wait to continue that work and imagine a world where teachers, where doctors, where social workers are leveraged in their productivity and their capability so that they can help patients, children and people who need it.

Conversation Starters

Dr. Christian Terwiesch says you should consider using ChatGPT to “generate multiple answers and try to challenge your thinking.” How do you feel about this? Do you agree or disagree? Have you done this already? Share your story and ideas about how you are using ChatGPT in the comment section of this article.

Is using ChatGPT to write an essay unethical? Why or why not?

Dr. Terwiesch talks about the power of ChatGPT to ease the information burden in health care. How do you see this technology transforming different industries? Where might generative AI make a strong impact?

Hello

Will ChatGPT replace professional translators?

Will it be helpful to educational leadership or have a negative impact?

I believe that AI is an integral part of the science that will unlock our future. If boundaries are set and it is used as a proper writing tool rather than as a way to cheat, I think AI will be the most important and powerful learning tool ever created, providing better opportunities for the youth of today’s society.

ChatGPT has taken the Internet by storm and has taken over many classrooms, from English classes to even math classes. Many students have embraced this phenomenon, using it to help them with schoolwork and studying. When teachers give us assignments to do in class, I often see classmates around me typing prompts into ChatGPT and waiting for a response. Even my teachers have turned to ChatGPT, using it to produce rubrics for projects and encouraging us to use it as a way to brainstorm. As a high school student who is familiar with the software, I find ChatGPT super useful. Sometimes I use it to find resources for my assignments, since ChatGPT can be more helpful than aimlessly browsing through Google.

As more and more students turn to ChatGPT to make their lives a little easier, I do not believe that using ChatGPT to write essays is necessarily unethical. Take, for example, my English class. When I was asked to write a 1,000-word essay on the crime and history of Long Island City, I was baffled. Since I don’t live anywhere near Long Island City, I had no idea what to write about. Searching the web turned up nothing useful. However, when I asked ChatGPT, I was able to come up with something to write about. As a student trying to keep up my grades, I find that using ChatGPT definitely challenges my thinking. From time to time, I ask ChatGPT to revise my essays or to grade them based on the rubric. This lets me see which parts of my essay need improvement and which parts should be deleted.

While asking ChatGPT to write your English essay for you is extremely unethical because it is considered cheating, I do not think asking ChatGPT to write an essay for inspiration is unethical. Academic dishonesty is something that should not be messed with, and it can have a detrimental effect on an individual’s future. Take, for example, a doctor who cheated all the way through medical school, using ChatGPT to do all their assignments. Even if they did earn the title of doctor in the end, how much would they really succeed as a doctor? How long would it take before someone noticed that they had gotten away with academic dishonesty? In addition, even when cheaters don’t get caught, cheating can affect their character and their value as a person. They may be more prone to breaking the law simply because they can get away with it. With the increased use of ChatGPT, academic dishonesty in classrooms is surely going to increase, and teachers will enforce stricter and stricter punishments for it. In a way, ChatGPT can also be a new reinforcer in classrooms, and maybe even in workplaces.

Adding on to Dr. Christian Terwiesch’s point about using ChatGPT to “generate multiple answers and try to challenge your thinking,” I do think that ChatGPT gives people a way to think outside the box. With ChatGPT, the possibilities are endless. You could ask ChatGPT anything from explaining a concept like how the economy works to compiling a birthday wishlist for you. And while this new outlet can feed into people’s struggles, it can also help them think outside the box. Right now, for instance, I am unable to think of an example that would clearly and effectively demonstrate this. However, with the simple click of a button, ChatGPT has generated a list of ideas with me. Suppose I ask ChatGPT to help me write a story about a detective solving a murder case in a small town, with a surprising twist that no one sees coming. Boom, there we have it. ChatGPT has now generated a list that I can mix and match to come up with a fascinating story that keeps readers on the edge of their seats.

While the possibilities are endless, ChatGPT is still flawed, oftentimes generating generic answers. But we can build off these generic answers and become inspired by what we have been given. Creativity is something all of us humans have a little of, whether artistically or mathematically. But with the addition of software like ChatGPT, I do believe that we can stimulate our creativity and produce better results in our work. AI will definitely be a big part of the near future, and it can be used for the betterment of society and humanity. As we strive toward more advanced ideas and developments, AI such as ChatGPT can serve as something people learn from. Who knows? Maybe ChatGPT will become the next Google.

I agree with this because it makes sense. It is easier to write things like essays if you brainstorm beforehand, and ChatGPT is great at this. I feel great about it because it makes writing easier for a lot of people while still requiring you to use your brain. I have done this before to help me draft thesis papers and other creative projects. It is especially useful in the brainstorming stage and for giving you ideas, but it is still an AI. I tried using it to author a full paper for me, but since it takes data from all over, the paper was full of grammatical errors and was not the best, so you must proofread it.

Using ChatGPT to write an essay, or most things, is unethical because it is not your work. If you draft an essay using something written by an AI that just uses other people’s writing, it should be classified as plagiarism.

I see this technology transforming information-based fields like medicine, business, and even computer science, due to the AI’s ability to collect and analyze data and give improved responses over time. As more people use AI and it gains more data, it will become more accurate and smarter. In the medical world, people at home could describe their problems to the AI, and it could give them a partial diagnosis and even ideas on what they could do. In the business world, it could give companies ideas on how to improve their profitability or customer satisfaction. It can also show programmers ways to simplify their code and make it run more efficiently. For everyone’s knowledge, I did not use AI to write this response. I only used Grammarly to improve my grammar.

Hey Oladapo, I agree with some of your sentiments on AI and definitely agree with some of your talking points, but I still struggle to completely align with your thoughts on using ChatGPT as a brainstorming tool. When you put something into ChatGPT and receive an answer, even if you ask for a contradictory view and pick between these opposing views, you lose the organic feel of human perspective. The fact of the matter is, you did not create the narrative you are outlining. The AI was actually the machine interpreting the facts and data and creating a view. Most viewpoints are not black and white, but instead fall on a gradient between the two. If you allow the AI to pick the spot on that gradient, you miss out on your own unique spot. Though subtle, our life experiences undoubtedly influence our judgment, even on topics completely unrelated to our lives. Deciding that it is better for AI to generate our thoughts for us feels like we are relinquishing those experiences. Additionally, turning to AI for brainstorming could potentially diminish our own creativity. Brainstorming is a skill that, if used constantly, will improve. However, if it’s neglected, it will deteriorate. It’s simply too easy for AI to become a crutch for someone as they move through school. Especially with AI being a relatively new and rapidly developing field, it is important for us to set a boundary that students cannot cross.

I also find your idea of plagiarism with ChatGPT especially interesting. AI is created from a collection of data taken off the internet from others and then compiled together to train an algorithm that eventually becomes the chatbot we use. When you pull an essay from it, it seems like plagiarism, right? But isn’t that what the human mind does anyway? When we research articles and write an essay, aren’t we compiling data and transforming it like the AI? Certainly, it’s still plagiarism to take an AI-generated essay and claim it as your own, but I feel like this kind of plagiarism is more akin to taking your friend’s essay and turning it in than to copying the data from the online articles that the chatbot was trained on.

I think your idea that AI should instead be used in the field is much better than having students use it, which could inhibit them by crippling their creativity and thereby inhibit future progress. Deploying it as a tool to improve general quality of life seems to be the best application for AI, because it allows us to enjoy the advancements in technology while keeping it from slowing down innovation.

In academia, an abrupt advancement in AI technologies, including the emergence of ChatGPT, has ignited a passionate debate among students and educators regarding ethical boundaries. Within this context, delicate concerns such as dishonesty, plagiarism, and credibility loom prominently, casting a shadow over the academic landscape. As the blissful arrival of summer break approached, students enrolled in AP World History at my school grappled with a weighty final project that accounted for a significant portion (10 percent, to be precise) of our overall grade. For many students eager to improve their grades effortlessly, this undertaking presented an alluring opportunity. While some approached their academic struggles with integrity, others succumbed to the temptation of a quick fix by turning to ChatGPT. As the final week of school approached and teachers calculated end-of-term grades, a disconcerting realization spread through the student body: those who had relied on AI assistance had failed resoundingly, receiving a dismal score of zero. Accepting these consequences was a bitter pill they had no choice but to swallow; they had strayed from honesty and were now facing the repercussions. However, given the significant number of students who had sought help from ChatGPT, our history teachers found themselves contemplating whether keeping these zeros in the gradebook was indeed the most prudent course of action.

This scenario sparked a thought-provoking discussion among the students, skillfully facilitated by our teachers. The topic at hand was the appropriate boundaries of AI usage. Where should we draw the line? Can AI be used for outlining or research? Although everyone agreed that copying and pasting an essay generated by ChatGPT was clearly unethical, these lingering questions required careful consideration to ensure fair evaluation for all students. As the class sought compromise with our teacher, we moved closer to unraveling this ethical conundrum. Eventually, through collaboration between students and teachers, we devised a set of regulations that everyone must adhere to. The class reached the following conclusions. First, research from ChatGPT could be used if its sources were traced and appropriately cited. Second, the credibility and currency of the information obtained had to be verified. Both educators and students considered these two stipulations essential for ethical practice. The first guideline aimed to combat plagiarism by enforcing proper citation, while the second addressed the occasional fallibility of ChatGPT’s output through mandatory credibility checks. These rules emerged from the collective wisdom of the AP World History class, since our honor code had not yet adapted to these new advancements in technology. Reflecting on this experience, our class unanimously agreed that deploying ChatGPT to compose an entire essay was unquestionably unethical. However, we acknowledged that using it as a reference or a source of inspiration for ideas was not inherently wrong.

What intrigued me was the parallel between the issues my class encountered and Dr. Terwiesch’s experience. Like us, Dr. Terwiesch discovered elementary errors in the answers ChatGPT provided. Many individuals conducted research using ChatGPT and received brilliant insights, but occasionally stumbled upon minor yet glaring mistakes. For example, they might come across an event’s date that was off by a few years, reminiscent of the mathematical blunders Dr. Terwiesch encountered. This is exactly why our credibility guideline was established.

When deliberating the inclusion of AI in assignments, I align myself with Dr. Terwiesch’s viewpoint. Personally, I believe that we should persist with our current approach of certifying skills and evaluating students’ understanding through traditional testing methods. While tests effectively gauge knowledge, they often lack engagement and do little to support learning. Consequently, I concur with Dr. Terwiesch’s argument that modifications are necessary to foster student engagement with the material at hand. It is paramount for us to explore alternative techniques that encourage active participation, such as group discussions, simulations, case studies, hands-on experiments, and collaborative projects. These approaches, especially when they leverage AI technologies like ChatGPT, hold immense potential for enriching learning experiences. They offer valuable opportunities for students to participate actively and apply their knowledge.

I think this article did a fantastic job of discussing both the endless capabilities and limitations of AI-based information. To put it in Professor Terwiesch’s words, “ChatGPT, like any tool powered by advanced AI, offers the potential for transformative benefits in enhancing communication, knowledge access, and problem-solving. However, its reliance on learned data also raises concerns about ethical usage, misinformation, and loss of human touch in interactions.”

ChatGPT’s “transformative benefits” are just as real as its abilities to spread “misinformation” and to be used for unethical means. Case in point? The quote I sampled above isn’t what Professor Terwiesch said at all. In fact, it’s nowhere in the article.

The quote I used was a simple byproduct of a seven-word query I typed into ChatGPT to generate something that would prove a point — that although I do agree that ChatGPT is an excellent resource in theory, I have bigger fears about its use by people in day-to-day life.

With the rise of the Internet, which puts endless information only a quick Google search away, many people don’t bother to read full-length articles anymore. Why spend five to ten minutes reading an article about AI when thousands of adorable pet videos are practically begging to be clicked on? Titles and ledes are often all the audience reads before moving on to other media online. ChatGPT’s ability to condense information into a couple of short bullet points only makes it easier for people to skip reading things comprehensively. The quote I used above, which I claimed was from ‘Professor Terwiesch,’ could then easily be mistaken for his actual words by an average reader of my comment who didn’t know any better.

Here’s a real quote from Professor Terwiesch. At the end of his fourth comment in the interview, he says, “If anything, I think that ChatGPT is going to be our friend.” This quote is so important because, if taken out of context and without thoroughly reading the rest of the article where he expands on this point, many people may take it to mean that ChatGPT is inherently a positive thing. Because of his quote that’s conveniently found only halfway into the article, readers may find the use of ChatGPT justified, and not bother to read the rest of the article.

Of course, this isn’t what Professor Terwiesch means. But it’s not his intentions that make a potent impact on audiences; it’s the information they can easily take in and commit to memory without paying attention to the actual purpose of the article. This phenomenon is an example of a logical fallacy classically known as the ‘appeal to authority’: when people believe that the information they consume comes from a reputable source, they tend not to analyze what they are reading. (Sounds a little like a certain AI writing tool, right?) At the heart of it all, it’s most important for people to take in information from an informed, unbiased standpoint.

From the perspective of someone who has taken the time to hear the words of Professor Terwiesch on the potential of ChatGPT, I do agree with what he says. ChatGPT is great at generating ideas and (mostly) well-written content but fails spectacularly when prompted to produce actual substantive material. I just wonder how many people will misunderstand the second part of Professor Terwiesch’s point and continue to use AI for less noble means.

Round 4: Potent Quotables

In Art or English class, I always stop and grin whenever there is a rubric criterion for creativity. Indeed, in the Wharton article above, Mr. Christian Terwiesch himself makes it sound simple, saying creativity has “two steps to it…You need to create and you need to select.” Yet, when thinking about the different ways creativity can be structured, I realized that “create and select” can be explored further. These two steps can be used to distinguish between two structures I have noticed, one of which involves ChatGPT.

Imagine a balance scale. One end is labeled selection, and the other creation. The more effort you spend on a step, the further that end of the scale tips down. When evaluating each structure of creativity from experience, I thought about which end would sit lower when responding to the prompt below.

Prompt: How would you structure a Wharton comment in response to [the quote given above]?

I. The Draftist Method – Without ChatGPT

I used a scale analogy to show how the steps in Mr. Terwiesch’s creative process could be used to compare the basic create-select process with and without ChatGPT. Previously, I thought there were many possible structures, and there might be, but after a bit of trial and error, it all seems to boil down to create -> select. The only variations in this structure come from repeating “create -> select” multiple times.

The thing is, drafting represents creation. Think of it as part of the more familiar version of creativity: the spur of brainstorming, the implementation, and then the big X’s marking “this doesn’t work” on each draft.

For example, I spent an hour structuring my drafts section to include each and every one of my previous major ideas. In the end, however, I went so off-topic that my overall essay became a lot more confusing.

I ended up rejecting this particular draft and revising it: a yes-or-no selection. To erase an hour’s worth of progress, all I had to see was that the idea didn’t fit.

In these scenarios, too much effort was spent on creating and not much on selecting. We can visualize how the balance of the scale shifts depending on whether the effort originates with ourselves or, as we shall soon see, with ChatGPT. When it originates with ourselves, we can always visualize a much lower end for creation.

II. The List Method – With ChatGPT

What if most of the ideas were systematically available to you from the beginning? How would that shift the balance of the scale (the effort of creation versus that of selection)? Rather than coming up with new ideas ourselves (phew), we can get assistance from ChatGPT itself. Given ChatGPT-generated recommendations, we can further contrast the relative emphasis on Mr. Terwiesch’s “select” versus “create.”

When I gave ChatGPT the prompt above, I got eight key points. They were as follows (rephrased for clarity):

1. Delve Deeper into Terwiesch’s Process.

2. Look into Wharton’s Relationship with Creativity.

3. How does Technology Mix with Creativity?

4. Why Does Selecting Ideas Matter?

5. Balancing Quantity and Quality.

6. How ChatGPT Nurtures Entrepreneurial Brainstorming.

7. How can Diversity Enrich Terwiesch’s Process?

8. How does Terweisch’s Process link to Innovation and Results?

We can see that a lot of work lies in selection! I would have to look at the choices it gave me, narrow them down, and then compare them to each other. Then I would have to handpick the best one.

This was a difficult process for me, since I had to choose among eight options, most of them worth exploring. In the end, after eliminating most of them because I was unfamiliar with their topics (such as entrepreneurship and diversity), my overall theme ended up closest to number 1.

This led me to conclude that there is a separate type of creativity from what we see in drafting: one where the selection end of the scale is weighed down much further.

Referring back to the shift of the scale, we can form a quick picture of what each interpretation might look like. From the draftist’s point of view, the creation side of the scale is heavier, whereas in the list method, the selection side is. Thanks to Mr. Terwiesch’s “create and select” and my own experiences, I realize that emphasizing one step gives a different interpretation of creativity than emphasizing the other. At first, this may seem undesirable, since creativity is a word we typically use to elevate humans above mere computers. However, it is perhaps equally important to understand that computers and AI lack the filtering mentality of humans, and so cannot meaningfully identify what matters to people’s sense of motivation and value when they churn out algorithm-based responses.

I will end with the idea that while everyone feels they should be the draftist, being part of the selection process can be just as worthwhile. ChatGPT is really a tool: a way to cover two steps with just one, selection.

As a child, I used to observe the customers who came into my family’s store. I noted their purchases and the frequency with which they would get those items. I noticed the slang they used. I watched the way they spoke to my parents. Some showered them with warmth; others used not-so-creative ways to express their anger when there was a misunderstanding. A classic one was “Go back to your own country.”

My family’s store was filled with real-life examples of psychological theories. For example, the scapegoat theory came up often: when a child was caught stealing, they blamed their sibling for daring them to do so.

The brain is so complex, and humans have always been curious to understand themselves better. Yet it is nearly impossible to understand every aspect of ourselves. A quote I once came across explains this: “If the brain was simple enough that we could understand it, we ourselves would be too simple to be able to understand it.” Every event, emotion, and encounter with the forces of the world changes us. We are never one version of ourselves, not for as long as time is moving and the world is too.

To understand the brain beyond what is taught in a four-month school course, I enrolled in a research program called GSTEM. In this program, I worked with graduate students, scientists, and professors. The project focused on how schizophrenia can be studied through brain organoids, which are balls of neural cells that mimic aspects of a brain’s structure and function.

By analyzing images of brain networks from multiple combined brain organoids, we gain a better idea of how schizophrenia shows up in neuronal interactions. The quote I selected, which asks us to come up with multiple answers, connects back to what we did in the lab. To craft an effective treatment for schizophrenia, we must collect a lot of strong data. Each piece of data is part of the puzzle: a smaller answer to the bigger answer that no one has reached yet. Another common phrase for this idea is a ‘multi-pronged’ solution: not so much multiple individual solutions as the simultaneous application of many partial solutions. In the end, these small conclusions from our hypotheses come together to form a strong answer as to what causes schizophrenia and how it can be effectively treated.

In GSTEM, we use trial and error. We think about schizophrenia in terms of the neuronal network, the culture of the cells, and the current problems with schizophrenia treatment. When Terwiesch suggests that we “generate multiple answers,” I also think about how there are multiple problems within one overarching problem. In my case, there are many issues with current schizophrenia treatment: over-reliance on animal testing, lack of progress in developing new treatments, and negative side effects. Animal testing has two main problems: the results often don’t translate to humans, and it is often expensive. Schizophrenia treatment has not changed substantially since it was developed decades ago. The side effects of current treatments include obesity and Parkinson’s-like movement symptoms, which many patients do not consider worthwhile trade-offs. So, in our project, we took on the second part of Terwiesch’s advice: trying to challenge our thinking.

For our project, as a high school student, I challenged my thinking by looking more closely at the action potential process, which sends signals and messages throughout the brain’s networks. Calcium also plays a large role in this signaling, alongside ions such as sodium, potassium, and chloride. My mentors constantly asked me to think about why calcium levels may be decreased in schizophrenia patients, or why self-growing neural networks are better models for studying schizophrenia than rodent models. Rather than thinking inside the box and using what had been provided (mice for studying the brain, and a focus on the action potential process alone), I was encouraged to look into the patterns of calcium activity in the brain. At Columbia University, where I was based, creative thinking is encouraged through the architectural organization: no building is dedicated to just one area of study, because unusual crossovers between fields can lead to groundbreaking discoveries.

To hear opposing points of view, including on why studying schizophrenia is important, why mental disorders exist, and whether or not they are real, we need to look into data collection. To the human eye, schizophrenia isn’t like a bruise; one can’t see its damage, only its effects. The delusions and hallucinations that people experience can be observed, but a neuroscientist can’t confirm them or trace them back to their cause. With a bruise, you can explain that you were hit by a ball.

Even though schizophrenia isn’t physically visible in front of my eyes, it is still there. We don’t typically see air when walking around, but we all know it is there. So, when we put together a study, we have to look at it from all perspectives to clear up confusion and present it clearly to a broader audience. Data collection is what helps the opposing side see our side, and it also gets us thinking about the other side. We think about why they have these questions, and why those questions are perfectly justified. In the world of academia, it is best to leave no stone unturned, so all thoughts are encouraged. These thoughts may even help strengthen the research study and its results.

While I expected GSTEM to offer a true laboratory experience (which it did), I did not expect the other lessons: thinking critically, gaining new perspectives, and being okay with having many possible answers and theories. I’ve also learned that it is important to be well-versed both in the subject and in awareness of the audience. As I present my findings, I hope to use the speech skills I’ve gained over the course of the program to effectively present my unique, cutting-edge findings and conclusions to a crowd with varying views on what schizophrenia is.

The introduction of ChatGPT by OpenAI in November 2022 has captivated many due to its remarkable generative artificial intelligence capabilities. This AI technology, fueled by its ability to generate comprehensive essays and formulate complete ideas based on prompts, is causing a stir in the education community at all levels. Educators and institutions are grappling with how to handle and possibly harness the transformative potential of ChatGPT. Questions arise regarding academic integrity and the future of individual thought and creativity when students can easily generate AI-written responses to assignments. Professor Christian Terwiesch, a pioneer in researching ChatGPT’s capabilities, embarked on a study to evaluate its performance in a typical MBA course exam. His findings showed that ChatGPT excelled in answering simpler questions but struggled with more complex ones, requiring human guidance. Despite its proficiency, ChatGPT raises questions about the evolving role of technology in education and assessment. Terwiesch envisions a future where ChatGPT and similar technologies facilitate learning rather than replace it, encouraging students to use these tools to explore ideas and perspectives, ultimately enhancing critical thinking and creativity. The impact of generative AI extends beyond education, potentially revolutionizing various industries, from healthcare to architecture, by meeting diverse needs through innovative applications and ushering in a new era of problem-solving and efficiency. As technology continues to connect customers and businesses, the challenges of managing vast amounts of data and information become apparent, and the role of AI in addressing these challenges remains a captivating area of research. Terwiesch anticipates AI’s potential to enhance the productivity and capabilities of professionals across domains, from teachers to doctors, ultimately benefiting those in need.

It was refreshing to read a perspective so different from the one offered to me at an Indian high school. Professor Terwiesch gives us insight into how generative AI, used ethically, can be helpful and increase productivity in the near future. Teachers at my school associated ChatGPT with everything unethical: cheating, loss of creativity, and an overdependence on technology that would stall critical thinking. Although I believe some of these concerns are valid, my opinion (and you can take it with a grain of salt) is that AI is as revolutionary as the invention of the car almost 140 years ago and the first computing machines almost 200 years ago: BIG, REVOLUTIONARY, and capable of MOVING MOUNTAINS. Since AI is capable of changing the world, we should adopt it in the small things we do today. This summer I was working with my dad at his stock broking firm, and my search for a good teacher (after my father exhausted his limited prowess as a teacher) led me to take a picture of a straddle chart for a NIFTY option on my phone and post it to the all-new GPT-4o in ChatGPT. I never stopped after that. The detailed, well-explained responses blew my mind; I cross-questioned the AI and made it explain things that I probably never would have understood with my dad alone, or that would have taken me hours of reading and research to understand. I used AI to answer my questions, then sat with these concepts and my dad to develop a deeper understanding of them. This let me talk to my dad about the stock market at a level a little closer to his (he is a trader with 30 years of experience). This is why I believe AI should not be seen as a tool that makes us lazier, but as one that makes us more efficient.

From this stems another debate: whether AI will lead to job losses. I feel that individuals who keep upskilling, use their creative abilities, and learn new skills quickly will stay ahead, just like the geniuses who are adopting AI to make life easier (cue to check out the Styl App, the Tinder for clothes by Dhruv Bindra).

(Taking a risk by mentioning this, but I used ChatGPT to edit and correct this comment. No more expensive Grammarly subscriptions!)

“It’s doing your homework for you!” I exclaimed, sitting across from my senior, who looked at me with a placid expression as he observed my frantic reaction to a tool generating his history homework for him.

That was the first time I came across ChatGPT, and I was struck by sheer disbelief. That moment, followed by a brief explanation, started quite an eventful journey of learning.

I was immediately taught to be averse to this technology by the teachers at my school, who labelled it lethargy’s partner in crime. While my Indian high school was all for technology and change, ChatGPT promptly appeared as a threat to student creativity and idiosyncrasy in the eyes of my teachers. Initially, I believed them. I saw students in my class use the AI tool for the most minor inconveniences, putting not a single ounce of effort into their schoolwork apart from typing in the prompt. It was deeply demotivating to see unoriginal work earning praise while the AI “infiltration” went unchecked, and extremely discouraging to work much harder than my classmates only for that effort to go unnoticed. I think this was when I started detesting ChatGPT. A few months later, I decided to find out just what was so magical about this Artificial Intelligence revolution. I found it massively invigorating how ground-breaking the technology seemed, which is when I took to Google to figure out how it works. AI models are trained on sets of data, and while data is magic for most of my finance buddies, it’s simply a past record, and most definitely not an emblem of creativity and innovation. However, as I used the tool more frequently, I realized that AI-generated prompts and “ideas” were an impressive way to start building your own ideas, and perhaps even to develop the ones generated for you. Reading about a similar thought in this article was extremely relatable and revitalizing, considering how I’ve spent most of my ChatGPT journey watching students use the tool unethically rather than as a way to develop their own learning process.

According to a Forbes article dated 28 January 2023, 89% of survey respondents reported that they had used the platform to help with a homework assignment. In my opinion, writing an essay using ChatGPT is entirely unethical. While ChatGPT could be a great tool for students to master, considering the colossal growth in the AI market (and how knowledge of machine learning could be an excellent asset on a 21st-century resume), it is also hindering student progress at the school level. By generating essays for students, it is bringing their engagement with content to a new low. Personally, I believe another reason this is unethical is how unfair it is to students who honestly did their work. Research gaps and plagiarism concerns persist, along with other problems like misunderstood contexts and biased data. Lastly, as a student interested in writing and literature, I firmly believe that writing involves a personal touch: a level of idiosyncrasy and characteristic sentiment, shaped by your experiences, that is simply unmatched and cannot be replicated by AI. In my opinion, the solution to this conundrum is finding a balance. For instance, I’ve asked ChatGPT to explain many finance concepts to me that are typically meant for college students. When I asked it to explain the theories as it would to a 14-year-old, it elucidated the concepts using relatable examples. On a larger scale, the strengths of the AI tool can be leveraged to make learning more personalized and can even help teachers design effective, engaging lesson plans. A teacher of mine told me how she used ChatGPT to come up with conversation starters to introduce new lessons in class. In retrospect, the ensuing conversations were massively beneficial to the classroom, fostering a newfound interest in the subject among students who generally disliked it.

In conclusion, ChatGPT and other AI models are indubitably trailblazing, easing processes in various fields. While their generative prowess is lauded by people around the world, their idea generation is vastly improved by human development and intervention. At the end of the day, ChatGPT is a fascinating tool if used ethically, to better one’s own skills rather than simply to capitalize on technology. I’ll seal this comment with a quote from ChatGPT itself. When asked which fields it isn’t as useful in, it said, “While I can generate creative content, there are limitations to my creativity and originality compared to a human.” This stands as a true testament to the unmatched innovation, individuality, and ingenuity that are perhaps characteristic only of the human race and its phenomenally unfathomable mind.

Since November 30, 2022, when ChatGPT was first launched, it has been an intriguing and popular topic for students, teachers, and companies to discuss. However, debates over whether ChatGPT will “leverage people’s productivity and their capability” or pose risks to ethical standards and lead to unchecked dependency on AI have remained central among experts and the worldwide public.

As a student who has just completed my IGCSEs, I firmly agree with Dr. Christian Terwiesch’s advice to use ChatGPT to “generate multiple answers and try to challenge your thinking,” and it is something I do all the time. Personally, I see ChatGPT as offering numerous learning opportunities and serving as an invaluable, personalised tool for research and instant explanations on a wide range of topics, making knowledge more accessible to students than ever. However, when I use ChatGPT to brainstorm ideas for a project or essay, I do some work in advance before finding “the one that resonates with you.” I first list my original points and ideas, then type my questions into the ‘Message ChatGPT’ bar to gain more in-depth knowledge and develop them further. I find this extremely useful because it challenges my thinking and helps me learn and understand new content while staying in line with my original thoughts. I often start a discussion with ChatGPT about my thoughts and insights, ask for its perspective, and then hear the opposing points of view. Like a debate! In our IGCSE economics class, our teacher often tells us to embrace ChatGPT when studying and revising. I particularly enjoy ChatGPT’s help when answering 9- and 12-mark questions. When I first started the course, I sometimes lost marks despite covering all the points, and I struggled to improve my answers. ChatGPT provides a model answer that helps me refine my structure and explains how I could improve further.

Using ChatGPT as a “cheap writing tool” is undoubtedly unethical, but how far should the rules go? Should we ban ChatGPT completely, or establish guidelines that we should all follow? I would definitely choose the second option. Whenever I write IGCSE coursework or enter a competition, I notice that there is always a section called ‘Rules and Code of Conduct’ clearly labelling the DOs and DON’Ts of using AI. The ‘DOs’ always cover proper citation and bibliography when handing in the essay, to prevent plagiarism and other forms of cheating. The ‘DON’Ts’ state that students shouldn’t submit any work generated by AI because, as in Dr. Terwiesch’s experience, “there were surprising mistakes,” especially in solving maths problems. A funny thing happens every time I ask ChatGPT for help with a maths question. For example, it might explain that the answer should be A; however, when I ask “Why shouldn’t it be B?” and give my reasoning, it will always tell me, “Sorry, that’s right. The answer is B.” But if I say option C instead of B, it still gives me the same response. I think using ChatGPT as an efficient research and brainstorming machine is ethical, but if you go beyond that, it becomes unethical, and that is something you will regret for a lifetime.

I believe that generative AI will not only ease the information burden in the healthcare industry but also make a strong impact in the education industry. For instance, Khan Academy has already begun integrating AI into its lessons to personalise learning experiences for students all over the world. With its AI tutor, Khanmigo, the platform can tailor educational resources to individual learning styles, content, and pace, providing instant feedback and support. Not everyone is fortunate enough to receive a wonderful education; however, this AI technology can help bridge the education gap, giving far more people worldwide an outstanding learning opportunity.

If we follow the rules correctly when using AI technologies, we can enjoy the invaluable opportunities, innovations, and advancements they offer.