AI and You: Learn to Be the Human in the Loop

When considering the evolving human-artificial intelligence (AI) relationship, which conjunction best captures the essence of this connection? Humans AND AI or Humans OR AI?

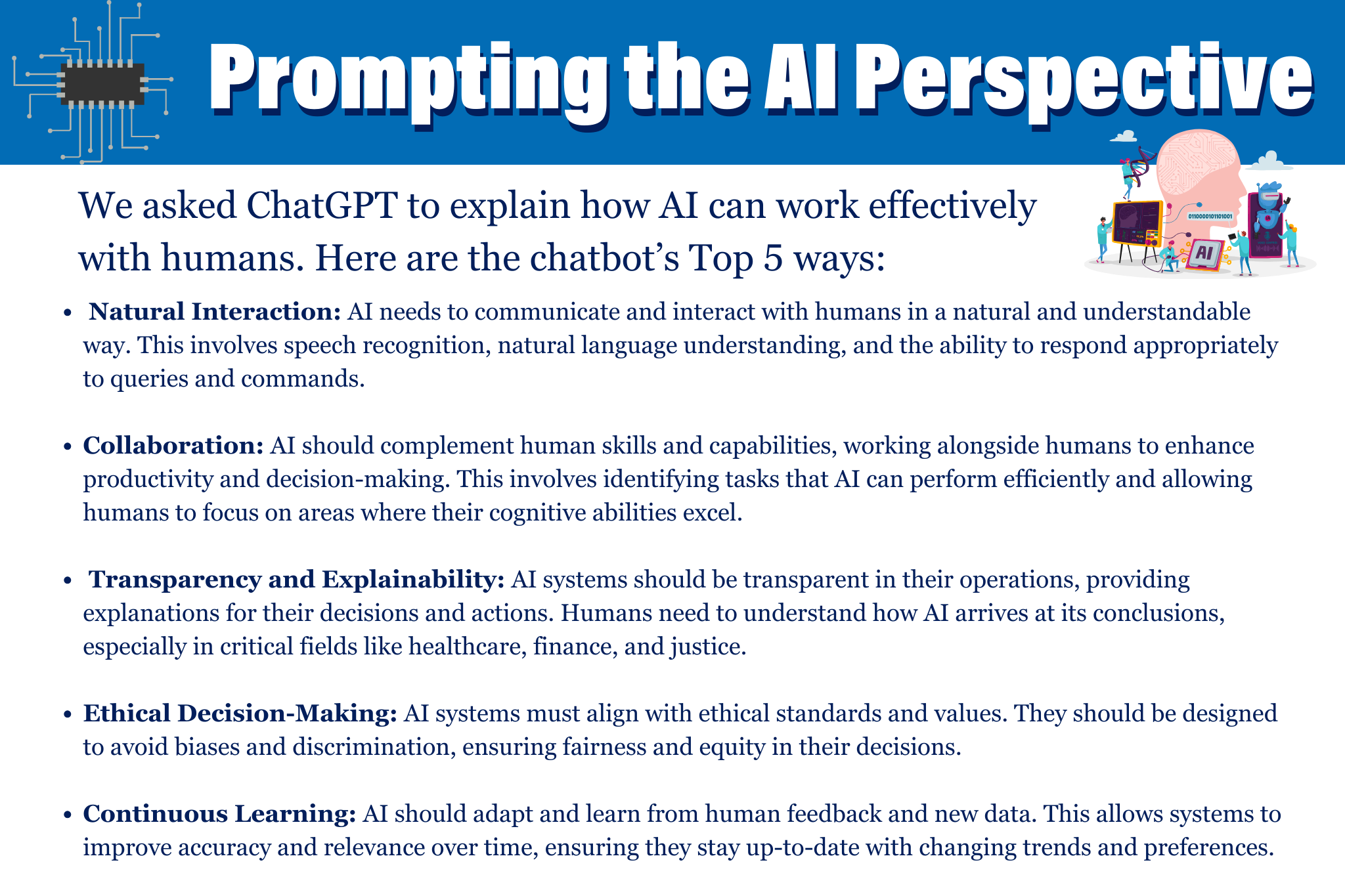

This seems to be on lots of people’s minds as generative AI apps like ChatGPT, Bard, DALL-E 2 and others become more sophisticated at generating content and images. Are machines replacing us? How do humans fit into the AI revolution? What are the most effective ways for humans to collaborate with AI systems?

The AI-human dynamic has been a hot topic in Wharton Global Youth Program’s Cross-Program Speaker Series, during which Wharton School professors present lectures to some 300 high school students participating in summer business programs on the Wharton campus. Speakers highlight their research and engage our global youth in cutting-edge business and finance topics.

That sometimes means getting a glimpse at actual business cases. Lynn Wu, a Wharton associate professor of operations, information and decisions who studies the implications and influences of technologies on business, has analyzed the human-AI dynamic in companies like DHL, a global logistics business specializing in international shipping, courier services and transportation. Her research often considers the broader context of AI (beyond ChatGPT), combining computer science and large datasets to enable problem-solving.

A Human-Augmentation Tool

According to Wu, DHL used data analytics (the process of examining data to make decisions) to improve how it shipped goods across different parts of the country and the world. The company captured data from its business processes for eight years to study the problems, then waited three more years before using machine learning (building algorithms based on the data) to make improvements. That deep data collection and analysis ultimately revealed a great deal about AI’s ability to advance operations.

We shouldn’t just hand all problems off to AI, Wu concluded. “DHL found that AI systems never got it right entirely,” she noted. “Humans always had to monitor what was going on because machines can’t solve many of the important edge cases: things on the edge, on the border, unusual events. The edge stuff matters a lot, and machine learning is not good at edge cases. Humans had to monitor that and teach AI about how the edge cases went wrong. Through human-machine collaboration, DHL was able to significantly improve the efficiency of loading pallets onto their cargo planes and cargo trucks. Key to this process was a continuous feedback loop, where humans improved on something, AI learned from it, and then told humans what else was important.”

Wu’s research into DHL and other companies suggests that AI can improve processes but is not always ideal for radically new situations. “The core of all of it is that you’ve got to think about AI as a human-augmentation tool,” said Wu. “It’s not a replacement tool or a substitution tool. AI can do some things really well, but it can also do some things wrong. Humans need to be in the loop. And having a human-machine collaboration is a new way to organize firm activities. That’s where you guys come in. You’ve got to figure out how we marry machines and humans in a new way. That is the future of our economy.”
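Wu’s feedback loop (the machine handles routine cases, humans handle the edge cases, and the machine learns from the human corrections) can be illustrated with a toy Python sketch. This is not DHL’s system, and the article does not describe how DHL implemented it: the nearest-neighbor classifier, the 0.8 confidence threshold, the `ask_human` reviewer callback, and the numeric “light”/“heavy” cases are all hypothetical choices made only to show the shape of the loop.

```python
from collections import Counter


class ToyNearestNeighbors:
    """Hypothetical stand-in for an ML model: predicts the majority label
    among the k closest labeled examples (1-D numeric feature)."""

    def __init__(self, k=3):
        self.k = k
        self.examples = []  # list of (feature, label) pairs

    def predict(self, x):
        """Return (label, confidence), where confidence is the share of the
        k nearest neighbors that agree with the majority label."""
        if not self.examples:
            return None, 0.0
        nearest = sorted(self.examples, key=lambda ex: abs(ex[0] - x))[: self.k]
        label, votes = Counter(lbl for _, lbl in nearest).most_common(1)[0]
        return label, votes / len(nearest)

    def learn(self, x, label):
        """Store a labeled example; future predictions take it into account."""
        self.examples.append((x, label))


def human_in_the_loop(model, stream, ask_human, threshold=0.8):
    """Accept confident predictions automatically; send uncertain ones
    (treated here as edge cases) to a human, then feed the answer back."""
    escalated = []
    for x in stream:
        label, confidence = model.predict(x)
        if confidence < threshold:
            label = ask_human(x)   # the human decides the edge case
            model.learn(x, label)  # the model learns from the human's answer
            escalated.append(x)
    return escalated


def reviewer(x):
    """Hypothetical human reviewer: anything 50 or above counts as heavy."""
    return "heavy" if x >= 50 else "light"


if __name__ == "__main__":
    model = ToyNearestNeighbors(k=3)
    for x, label in [(10, "light"), (20, "light"), (30, "light"),
                     (70, "heavy"), (80, "heavy"), (90, "heavy")]:
        model.learn(x, label)
    print(human_in_the_loop(model, [15, 85, 50, 52, 51], reviewer))  # prints [50, 52]
```

With k=3 and a 0.8 threshold, only a unanimous neighborhood counts as confident, so the mixed cases around 50 go to the human. After two human answers, the similar case 51 is handled automatically. A real system would use a proper model and confidence measure; the sketch only shows how human corrections flow back into the machine.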

We Are In Control

Your job will likely get more interesting with AI, said Ethan Mollick, an associate professor of management at Wharton and academic director of Wharton Interactive. He believes people should stop thinking of AI, particularly generative AI that can respond to prompts with full written essays, artwork and complete ideas, as “overtaking” human jobs.

“I think there’s too much focus on the idea that overlap with AI means your job is gone,” said Mollick, who recorded a “Practical AI for Teachers and Students” video series on YouTube, and has written a number of articles on his Substack page to help people navigate the rapid changes brought on by ChatGPT and other generative-AI apps. “You want to be in an industry where you are heavily exposed to AI and you want to work with AI to do your job. Marketing, writing, a lot of the kinds of tasks that you guys will do are very affected [by the technology]. Professors are very affected. But that doesn’t mean my job gets worse because it’s affected. It means I get to use AI tools.”

Mollick, who spends a lot of time studying the humans AND AI landscape, encourages people to stop thinking that AI is something that is happening to them. “We have control over AI, and we get to decide how to use it,” he noted, adding that he also feels government regulation of the technology is important.

Rather than focus on “the extinction scenario” where AI takes over the world (Humans OR AI), he shared a few best practices for people to follow as we figure out how to live and work with AI:

✅ Invite AI to Everything

“As long as you’re being ethical about it, you should be using AI in everything you do.”

✅ Be the Human in the Loop

“You prompt AI best by giving it bullet points and stuff to work with. I would say, for instance, ‘write an essay with the following points,’ and then I would feed it the points. And then I would go in and say change paragraph two or I would totally rewrite it myself. You want to figure out how to become the human in the loop and work with the AI to do better things.”

✅ Tell It Who It Is (and Treat AI Like a Person)

“The biggest issue with ‘getting’ AI seems to be the almost universal belief that, since AI is made of software, it should be treated like other software. AI is more like a person in how it operates and does a good job with writing, analysis, coding and chatting. Treat it like a person, give it feedback like a person, and teach it like a person.”

✅ This Is the Worst AI You Will Use

“Once you guys are in college or graduate college, AI will be changing almost every job. You need to figure out what that future looks like for you. When we think about jobs in the modern economic sense, we think of them as bundles of tasks. And these jobs aren’t one thing you do, they’re a lot of things you do. AI is going to take away some of those tasks — a lot of the boring parts of your job. Say you don’t like filling out expense reports. AI can do that for you. I think you are going to be handing off the worst parts of your job. I don’t need you to change your career path based on this. But you should be aware that this AI thing is real and it’s going to affect lots of jobs, especially jobs that Wharton MBAs and undergraduates tend to go into, like consulting. So, keep an eye on it.”

Conversation Starters

Professor Lynn Wu says, “The edge stuff matters a lot, and machine learning is not good at edge cases.” What does she mean by this and how does this underscore the importance of human-AI collaboration?

Do any of the insights in this article contradict what you’ve been told about AI? For instance, how do you feel about Professor Ethan Mollick’s declaration to “invite AI to everything”?

Have you had any run-ins with AI that made you question the AI-Human dynamic? Describe your experience in the comment section of this article. What conclusions did you draw from this experience and how might it inform your own future with AI?

Is coding or CS experience required to take full advantage of this summer program?

In the realm of machine learning, Professor Lynn Wu’s insight into the significance of addressing edge cases resonates deeply with my experience in a recent project on semi-supervised learning. In this project, we delved into the dynamic landscape of leveraging both labeled and unlabeled data. Unlike traditional supervised learning approaches that rely solely on labeled data, semi-supervised learning recognizes the importance of incorporating unlabeled data to enhance adaptability and performance.

This approach becomes particularly pertinent when dealing with edge cases—those instances that defy conventional categorization. By integrating unlabeled data, our semi-supervised learning model showcased a capacity to navigate and learn from scenarios that do not neatly fit into predefined categories. The synergy between labeled and unlabeled data allowed for a more nuanced understanding of complex, real-world situations, echoing the need for machine learning systems to be robust in the face of diverse and outlier-rich datasets.

In my reflections on this project, I am inclined to underscore the parallel between our semi-supervised learning strategy and the call for human-AI collaboration. While machine learning algorithms excel in pattern recognition and processing large volumes of data, the inclusion of human intuition and expertise remains pivotal, especially in scenarios where the data landscape is intricate and unconventional.

I completely agree with your perspective on Professor Mollick’s advice to “invite AI to everything.” AI has practically infinite potential, but it is important to view it as a tool that helps decision making rather than one that replaces it. Your work with the recommendation system shows this perfectly. While AI is brilliant at processing extensive data and spotting patterns, the nuanced understanding and context-specific insights that humans bring are irreplaceable. This balanced approach allows us to harness AI’s capabilities without losing the invaluable human touch.

Your insights into the potential risks of over-relying on AI are spot-on. When not used properly, AI can be somewhat unsettling. Misapplication or excessive dependence on AI can lead to significant issues, especially when dealing with unique or unusual scenarios that require human judgment. People like you, who work to keep AI in check, are crucial to the future of the technology. Ensuring that these programs bolster our society without taking away its liveliness is paramount. This allows us to use this powerful technology responsibly and effectively.

Your point about the future significance of AI proficiency stands out to me. As artificial intelligence becomes an increasingly important part of our society, those who work well with these systems will have a colossal advantage in the business world, a consistent leg up in a landscape that will constantly change. As uncertain as our future is, AI’s prevalence is almost a sure thing. By treating AI as a powerful ally, one that still requires human guidance and oversight, we can unlock its true potential. This balanced approach, where human insight complements AI’s capabilities, is what will drive future success in our rapidly advancing economy.

Professor Ethan Mollick’s recommendation to “invite AI to everything” resonates with the notion of harnessing AI’s strengths across diverse domains. However, my personal experience underscores the importance of recognizing AI as a tool rather than a complete replacement for human decision-making.

In a specific instance, our team was developing a recommendation system using advanced AI algorithms for a complex project. While the AI excelled in processing vast amounts of data and identifying general patterns, it faced challenges with nuanced and context-specific scenarios. As the super semi-supervisor, my role became crucial in providing guidance, interpreting unique cases, and ensuring that the AI’s recommendations aligned with the project’s overarching goals.

This experience highlighted the need for a strategic collaboration between humans and AI. While AI can analyze data efficiently, human decision-making brings contextual understanding and the ability to navigate intricate situations. In this scenario, the super semi-supervisor role became pivotal in steering the AI towards optimal outcomes by complementing its strengths with human insight.

The key takeaway is that inviting AI to everything should be a thoughtful and strategic endeavor, recognizing the symbiotic relationship between human expertise and AI capabilities. Rather than viewing AI as a replacement, its integration should be guided by a nuanced understanding of where AI excels and where human judgment remains irreplaceable.

I totally agree with your opinion on Professor Ethan Mollick’s declaration to “invite AI to everything: You should be using AI in everything you do.” AI is a clever machine that can do our jobs, but I think it can’t be a replacement or substitution tool, as Professor Lynn Wu said. AI can do something wrong, and it doesn’t have the power of intuition that humans have.

Your comment underscores the importance of recognizing AI as a tool to enhance human capabilities rather than replace them. Additionally, your personal experience highlights a critical aspect of AI’s limitations: the lack of intuition in dealing with nuanced and context-specific scenarios. This aspect reinforces the necessity of human oversight to interpret and guide AI outputs, ensuring they align with broader project goals.

While AI is effective at processing data and identifying patterns, it struggles with unique cases that require human insight and contextual understanding. This aligns with the broader view that AI excels in data-driven tasks but still relies on human judgment for complex decision-making. The human role as a semi-supervisor is crucial in bridging this gap, demonstrating how human expertise can complement AI’s capabilities to achieve optimal outcomes.

I agree with your last key takeaway: rather than using AI in everything we do, I think the most important thing is a harmonious human-machine collaboration that brings about efficient and rational results.

Professor Ethan Mollick’s points about AI are meant to benefit human users by bringing AI into daily tasks and businesses. The problem, however, is that using AI in many situations the way he suggests might not turn out well.

My experience using AI for some of my tasks didn’t go well, because AI is flawed, and even if you work with it and change some of what it brings to the table, the result doesn’t work out. Take writing essays, for example. When I gave AI requirements to follow, such as the topic, maximum word count, or number of paragraphs, it would always leave at least one out, leaving me with nothing of value. Yes, it can take in information and give a result very quickly, but that result often still needs work. It is often better to write essays yourself than to leave them to AI.

Even with these drawbacks, AI is fine to use as long as it is under human supervision and revision. AI should not be seen as a replacement for human work, but as a partner that works with humans to create something.

As a high school student, I deal with AI both in and out of the classroom almost every day, whether I need help generating ideas or solving a math problem. AI’s effect on my life grows by the day, and the question of how AI should affect our lives becomes more and more important.

As a child, I loved sci-fi movies such as I, Robot that featured smart, well-designed robots and artificial intelligence. I’ve immersed myself in learning about self-driving technology, machine learning, and problem-solving AI. With the release of ChatGPT, life felt surreal for some time. Knowledge that once would have required deep research was suddenly at my fingertips. I felt one step closer to being like Iron Man, with a personal AI assistant at all times.

However, AI is becoming more and more powerful. Tesla is closer to Level 5 autonomy than it’s ever been, and OpenAI recently released GPT-4o, an even more advanced AI system. It seems as if we aren’t far from the world painted in I, Robot: one where robots take care of everything, from technology to household chores, and most humans have been pushed into the background. With this future as a possibility, the AI-human connection should be a cooperation and cannot be an AI-dominant situation. Take ChatGPT, for example, which started off as an unreliable source and is now one of people’s main sources of information, with voice bots able to put sound to text. Soon, humans will no longer be required for access to information. Librarians, professors, and teachers are all professions at risk. Automation in factories will push millions of people into unemployment. Developers, scientists, and politicians should set limits on the extent to which AI can influence the world and protect the people who use it every day. This contradicts Professor Ethan Mollick’s declaration to “invite AI to everything.” AI cannot and should not be incorporated into everything. Many things, such as war machines, teaching, and art, should be left to real humans. AI is smart and resourceful as it is now. It will be more harmful than beneficial to allow AI into every aspect of life and let a highly advanced AI run the world.

If you ask my band teacher, he’ll describe our concerts as entertaining. Not mindblowing. Not spectacular. Entertaining. My school’s band program, an average program from a below-average middle school, isn’t exactly capable of creating the sounds that echo from Carnegie Hall. There is always room for improvement for any musician, but for us, perhaps the room is more like a master bedroom. But what if we incorporated A.I. technology into our concerts? Could we finally be above average?

In just these past few months, A.I. technology has transformed the modern world into something brand-new. As a 13-year-old classical music connoisseur, I often ponder how A.I. technology could reshape this timeless music genre. Classical music’s emotional depth and intricate compositions have captivated listeners for centuries. Streaming platforms and online databases make classical music accessible globally. Technology also allows composers and performers to experiment with new sounds and instruments, enhancing classical compositions. However, with the A.I. industry still developing rapidly, who knows what technology will do next? Classical musicians’ artistry and craftsmanship must guide the use of technology as a tool, not a replacement.

Although using A.I. to cheat our way into sounding like a professional band sounds sweet, there’s something about the true efforts of young musicians that sounds even sweeter.

The AI revolution has hit. With the emergence and rapid integration of generative AI models like ChatGPT and Gemini, many people find AI becoming part of their everyday lives. This, however, also leads to a debate about the future: whether AI will replace us or whether we will be able to safely harness its innovation. On one side is Professor Mollick, who pushes for AI adoption and counters fears with positivity. On the other, much of the population fears for their future as AI threatens job stability.

This very standoff is something I find in my everyday life. The debate over the future of AI is present at the smallest scale, within my own school. With the introduction of AI models like ChatGPT, many students find it easier to write essays and choose topics for projects. These new tools frighten some teachers, who ban AI from the classroom, representing the AI extinction argument and the fear of humanity being replaced. Other teachers, however, embrace AI, teaching us to handle these complex models properly and be the human in the loop, representing a future where humans and AI work together to innovate further. Over the months, I have even found myself integrating AI to create better-quality work, and I am always surprised by how much it has grown.

There is no doubt that AI raises many uncertainties for the future, but I believe how we handle it is entirely up to us. We can choose to harness AI’s capabilities for an innovative future or choose to stunt our innovation out of fear.

Professor Wu and Professor Mollick both suggest that AI is a great tool to complement human intelligence in the workplace. There are some aspects of being human that AI cannot replace, so we can look to AI as a tool, not a potential threat to our jobs. However, if my generation wants to efficiently use AI tools in the future, we need the opportunity to play around with AI. Despite its potential, AI use in schools is actively discouraged and can even be considered cheating.

In my freshman year language arts class, our teacher had us spend two weeks of in-class instruction time completing an essay because she didn’t want us working on our essays at home where we could use AI. Using AI was considered cheating. When a few students took their rough drafts home, our teacher was furious; she made everyone write the entire four-page paper by hand. In school, the use of AI is strictly prohibited, and teachers are taking preventative measures to keep AI out of the classroom. However, if we will need AI in our future careers, how will school prepare us for that future?

Recently, I attended a district-level technology conference with other students, teachers, parents, and administrators where we provided our own viewpoints on how AI should be used in the classroom, or if it should be used at all. Professor Mollick suggests that we should “[use] AI in everything [we] do” because “AI will be changing almost every job.” However, an overwhelming majority of the adults were against the use of AI in the high school classroom. Most of them fear that students would lose the ability to think for themselves if AI technology was allowed.

Students tend to hand problems off to AI without really knowing what’s going on. As Professor Mollick suggests, if we correctly prompt AI, we can work alongside it to complete tasks more effectively. However, many students are blindly following AI suggestions, even if they do not fully understand their task and the AI response. On the other hand, many adults will think through what AI is giving them, and they know to take AI responses with a grain of salt.

Collaboration with AI is a critical skill that needs to be taught in school instead of avoided. Parents, teachers, and administrators alike discourage AI use in schools because they question the dynamic between AI and inexperienced teenagers. Many teenagers cannot use AI properly, for they blindly follow AI instead of understanding the content. Yet, if we are not taught these essential skills, and are even discouraged from using AI altogether, how will we ever learn?

I would like Professor Wu and Professor Mollick to address the issue of using AI ethically in schools to enable students to learn how to use AI tools for their future careers. In my experience, AI is often looked down upon in the school system. We should change our negative perception of AI and reevaluate its place in education. We need to embrace new technologies by learning about them instead of avoiding them.

Zimeng,

I first want to thank you for making a strong case about AI usage in the classroom. Your emphasis on training students so they can get used to AI is highly relatable to me as a high school student who will be living in an AI-driven world in the near future. However, I feel that, while exposure to AI is becoming increasingly important, incorporating the technology into everyday classrooms poses significant risks to learning quality and creativity development, especially among teenagers.

Certainly, integrating AI into schooling has advantages like increasing productivity and helping students solve complex questions. However, it’s critical to understand the possible risks associated with overusing AI in educational environments, which can perhaps be greater than the merit derived from bringing AI into classrooms.

The most significant worry is that depending too much on AI may damage the quality of learning and creativity. A study led by Muhammad Abbas at the National University of Computer and Emerging Sciences in Pakistan found that university students who extensively used AI tools like ChatGPT exhibited weaker academic performance than those who did not rely heavily on these tools. Students who relied excessively on AI tools showed increased procrastination and memory loss, eventually leading to lower grades. This suggests that although AI tools can provide instant responses, they interfere with the process of acquiring deeper comprehension.

Another study, published by researchers from the University of South Carolina, underscores that children who regularly use AI technology to study often become excessively dependent on it, making it hard for them to study on their own without the aid of artificial intelligence. This eventually leads to a decline in creativity as they become unable to draw conclusions independently.

These results show that although AI can make the learning process easier, using it can bring about bigger side-effects, lowering the intellectual capacity of students and damaging their creativity.

While I agree with your idea of giving high school students opportunities to use AI, as they are about to face a world deeply intertwined with it, I believe incorporating it into high school classrooms is too risky. As an alternative, I strongly suggest mandatory classes about AI where students can learn about AI technologies, their applications, and how to use them responsibly. This would teach students to use AI properly, reducing the risk of the downfalls addressed earlier. Another approach is loosening AI policies for college students instead of high schoolers. College students are better suited to handle the complexity of AI since they are more mature and have stronger critical thinking abilities. Additionally, they are given more complex tasks that are better suited to AI use. Given the above, this approach could enhance students’ comprehension of AI while posing less risk than your suggestion.

In conclusion, while AI holds significant promise for transforming education and preparing students for future careers, its integration must be handled carefully. While you highlight the potential of AI as a tool for human augmentation, educators must ensure that its use in schools does not undermine the development of critical thinking and creativity. By encouraging AI literacy through specialized courses and encouraging responsible AI use in higher education, we can take advantage of AI’s advantages while preserving the standard of education. This balanced approach will enable students to thrive in an AI-driven future while retaining the essential human elements of learning and growth.

Hey Zimeng, thank you for sharing such a thought-provoking story and perspective. Your experience, unfortunately, is not unique to you or me but is a universal uneasiness—the feeling of being forced to go slower in this fast-changing world.

I completely agree with your take that using AI is a pivotal skill that deserves to be taught in schools. But as much as I relate to that notion, we also need to confront the blunt truth: systemic change doesn’t move at the speed of innovation. It moves at the speed of caution, which takes time, and sometimes a lot of time. Since the release of ChatGPT in 2022, when the general public was first widely introduced to generative AI, this technology has advanced at a pace unlike any other creation in human history.

Consider the calculator, one of the most sensible tools to compare with AI in the educational environment. It took an entire decade before the National Council of Teachers of Mathematics officially recommended calculator use in classrooms, and that was for a far less revolutionary technology. Now we’re talking about something that can not only assist but also write, code, analyze, summarize, and think, all on its own. The scale is simply unprecedented.

Given that context, the right question, I believe, should be: do the benefits outweigh the consequences when we rush to adopt AI in education?

While AI can be an incredible learning ally, it can also cause regression in an educational system that has been refined over hundreds of years to prioritize learners’ active thinking. Researchers around the world have already sounded the alarm: high usage of ChatGPT correlates with reduced mental engagement, particularly in cognitively demanding tasks like writing and critical thinking. As you’ve already noted, ‘Most of them fear that students would lose the ability to think for themselves if AI technology was allowed.’ That fear, disturbingly, isn’t unfounded; it is backed up by evidence from various studies. We do not yet have a functional framework for AI implementation.

Teachers, because they cannot fully understand such a technology or tell whether it is being used properly, are not willing to take the evident risk. Since the boundary between assistance and complete offloading cannot be drawn clearly, the advantages of AI are highly likely to backfire, blunting students’ minds instead of sharpening them.

This view merely serves as an introduction to the deeper motivations behind educators’ decisions. It is an explanation, not an endorsement, nor should it be. So I am not correcting you; I completely support the notion that we should not be discouraged from using AI entirely but rather taught how to use it efficiently without damaging our cognitive skills. We are entering an era where this technology is omnipresent, and where the job market, and even society in general, becomes a battleground for people’s ability to use AI to its fullest. As Professor Mollick put it in the article, “AI will be changing almost every job.” The educational system might not be to blame for now, considering the short time it has had, but if these trends continue for the next few years, they could certainly disadvantage many individuals.

Still, there are grounds for optimism. Universities across the globe, from California State to Stanford to the University of Sydney, are starting to embrace AI in education. These efforts might not be perfect yet, but they are signs that those in power are taking steps to integrate this powerful technology into the system.

Thank you for offering me a valuable chance to delve deeper into an emerging problem of this sort. The world is changing, our approaches to problems are changing, and so is the educational system. It may not be changing at a satisfactory rate yet, but the future is indeed promising. In the meantime, it would be wise for us to learn how to use AI effectively, whether through online courses, blogs, or YouTube. Opportunities to move forward are still out there for those who seek them.

Thank you, Diana Drake, for the enlightening article on the critical role that humans play in developing AI. I particularly enjoyed the input from various experts. Katy Cook’s emphasis on the need for human oversight in AI development and Kelly Jin’s explanation of the transformative impact of diverse teams were two different but equally important perspectives. Both opinions, I thought, significantly enrich the discourse on AI and highlight the importance of human influence in AI development.

This article is very relevant to my current research project. As part of my high school’s graduation requirement, I must write an extensive research paper on a topic of interest. I have always been intrigued by AI and advanced technology, so to explore my interests further, I decided to conduct in-depth research on how AI is reshaping the marketing industry. Although I started only a few months ago, I have already discovered major changes in the industry, including innovations in data analytics, personalized marketing strategies, and automated customer interactions. Overall, my project aims to explore how these advancements are currently being used and how they may be leveraged in the future.

During my research, I decided to experiment with AI-generated social media content. Using tools like Canva, Google Bard, ChatGPT, and InvideoAI, I allowed artificial intelligence to create whatever content it wanted. Initially, I expected AI to effortlessly create viral content in the blink of an eye. However, my experiments quickly revealed the limitations of AI in understanding human interests. The videos it produced lacked the creativity and originality needed to capture an audience: they were overly long, unengaging, and failed to generate views and likes. To me, the experience was a sharp reminder of how essential human creativity is in shaping artificial intelligence successfully.

I have no doubt that I can create much better content than the AI tools did. As a regular social media user, I have an inherent understanding of what captivates and engages audiences on various platforms, including the subtleties of audience preferences, trends, and effective storytelling, all of which are integral to creating viral content. By integrating human insights into AI development, we can enhance AI’s ability to produce content that actually succeeds.

The concept of “human-in-the-loop,” as discussed in the article, resonates deeply with my experiences and research findings. It reinforces the notion that while AI offers incredible potential for innovation, it must be guided and complemented by human creativity and judgment. In my opinion, this approach is the best way to ensure that AI develops safely and effectively. Moving forward, I will continue exploring AI’s capabilities while keeping in mind the important role humans play in developing artificial intelligence.

As an artist, I’ve always aimed to perfect my craft, spending late nights illustrating my thoughts down to the finest detail. When I finally finish a piece, a rush of satisfaction fills me, telling me that all my hard work was worth it. This is one of the human elements of art that AI will never be able to replicate. To a certain extent, I agree with Professor Wu and Professor Mollick that people and AI can form an extremely strong team when working side by side. I’d even propose using AI to take over repetitive work such as accounting or bookkeeping, which would allow people to let their creativity flourish and advance society as a whole. In future generations, fewer people will pursue jobs taken over by AI, seeking instead innovative tasks to conquer. AI’s ability to analyze data quickly, as shown in Wu’s example, can benefit researchers immensely. AI’s rapid generation of essays and text, which need only light proofreading by humans, is a seamless way to get your point across faster and with minimal thinking so you can move on to your next big idea. However, purely objective, research-based data analysis should be the habitat where AI thrives, and nowhere else.

Recently, AI art has been spiraling out of control, abused for its ability to generate pictures and “artwork” in seconds. However, the art used to train these AIs usually comes from non-consenting artists, forcing many to go out of their way to keep their work from being taken. Some use disturbance filters, layers of random colors overlaid on their works, just to keep their art from being used to train AI. In addition, countless people wrongly label genuine work as AI-generated. It pains me that artists must record videos of their entire drawing process, called speed paints, sometimes with small sketches on the side, just to prove that their art isn’t AI. As AI art becomes more prevalent, more social media companies are also selling user data to train this software. As a result, artists are fleeing sites such as Instagram and Facebook after the news that Meta is using their art to train AI. Professor Mollick tells us “We are in Control,” but does this really sound like the control we aspire to have?

Mollick’s fourth point is that AI is always growing and improving, which poses a looming threat to the art community. Anti-AI filters must become ever more visible as AI learns to overcome them. In the future, these filters may obscure the entire artwork and diminish the effect the piece originally had. As AI learns to become more realistic and humanlike, even learning how to “draw as a person” to pass speed-paint scrutiny, I begin to wonder whether it is worth investing so much in making AI pretend to have emotions. Mollick’s first piece of advice is, “As long as you’re being ethical about it, you should use AI in everything you do.” On paper, AI art isn’t unethical, as it’s just software analyzing a large body of collected data. However, I argue that with creators scrambling to keep AI from taking their work, and developers using it regardless, AI art is actually extremely unethical. Despite this, some people still see AI as a new technological development that will create riveting ways to express ourselves.

People naturally seek things they can relate to, which is why some are so put off by AI. AI, being just software that compiles its analysis into a single result, has no emotions. It can be programmed to use words or objects that often appear when a certain emotion is portrayed, but it will never have experienced that emotion itself. My art is based on my personal feelings and on events in my life that affect me. Through the hard work and hours of dedication that go into my craft, I’m able to fully portray the emotions I feel. And when a piece resonates with hundreds of people, I feel understood and my view feels valued. This kind of joy can be felt when viewing art, too; there’s an inexplicable ecstasy in seeing a work you can truly relate to. However, there is no such feeling in the hollow, automatic, mass-produced “art” that AI creates. Relating to such pieces is even harder when you learn that the artist behind them isn’t a person, or even a living being at all.

In conclusion, I agree that AI is a riveting innovation created to complement people in their work, but only in fields of cold, hard facts. As an artist, I believe AI should be a tool that allows more people to explore their passions and interests, not one that mass-produces pictures for the sake of attention and money. When AI enthusiasts start dipping their feet into the pool of creativity, however small the venture, AI isn’t needed. In fact, it shouldn’t be used at all. Instead, AI should handle mundane tasks, letting people branch out into their creative sides and make more innovative and impactful decisions.

In the article “AI and You: Learn to Be the Human in the Loop”, Diana Drake proposes a crucial direction for the future of artificial intelligence and humankind. Instead of framing the two as competitors, Drake emphasizes our relationship as “Humans AND AI”. In the article, Wharton Professor Lynn Wu emphasizes human-AI collaboration, using DHL’s operations as a case study in which human input is essential for handling “edge cases” that AI cannot manage alone. I believe more such collaborations should be developed as more sophisticated forms of artificial intelligence evolve. J.A.R.V.I.S., the artificially intelligent assistant tailored to the busy lifestyle of Tony Stark, both as himself and as Iron Man, serves as a good starting point for developing harmonious AI. Following Jarvis’s example, companies should steer development toward customizable artificial intelligence that can build bonds with its human owners. As depicted in the many movies featuring Iron Man, Tony Stark and Jarvis developed a unique human-AI relationship reminiscent of a human-human one.

The current conversation about AI and its impact on jobs often feels too cautious. We tend to see AI as just a tool to help us out, rather than recognizing the big changes it’s going to bring. The idea that “Humans AND AI” will work together smoothly overlooks the fact that AI isn’t just making our jobs easier—it’s changing the workforce in ways many people aren’t ready for. Thinking that AI will only handle the boring tasks is a bit naive; we need to understand that AI could replace entire job categories, even those we thought were safe from automation.

However, this does not mean we should stand still in our pursuit of harmony with AI. It’s easy to recognize that AI will reshape the course of human history, but it’s much more difficult to visualize where we stand amid the uncertainties ahead of us. To keep our roles as members of society, we must change the way AI is being developed. Instead of creating AI that can solve all of our issues and needs, we must guide the development process toward AI that supplements humans. Future AI systems should be developed with humans in mind, remembering that at the end of the day, AI is simply a tool to assist us. Some might argue that AI’s ability to complete mundane tasks frees up human minds and energy to focus on larger problems. However, there is a fine line between having AI complete the boring work and relying on AI for everything we don’t want to do. Following this path will only make humans lazier and more dependent on AI for everything.

As a society, our current approach to AI is bound to haunt us one day. However, by changing the direction in which we pursue artificial intelligence, we may very well be able to create an AI that is not only beneficial to us but still allows us to express the very things that make us human.

What does this mean for human capital development in developing nations, where the basics are still very much the focus and labour-intensive approaches are key to transforming the overall economy?

I agree with Professor Mollick that AI is more about augmentation than replacing humans. As I work on my video game, I use it A LOT. I’ve found its best use cases are finding and organizing data, as well as improving code.

Recently, I gave it a prompt: “Integrate mobile controls that work like this into my character’s code in the most optimized way.” It worked on the first try. I knew I could do it myself without a problem, but why bother? It was done in under a minute, leaving me free to spend my time on more creative and “human” tasks. Afterward, I only had to make minor adjustments, like setting the sensitivity. ChatGPT couldn’t fine-tune the sensitivity or other settings to feel right for players, but that was just a matter of tweaking a few numbers.

My point is that AI can boost our efficiency and help expand our work, not replace us. It can’t do everything from scratch by itself. We shouldn’t fight it. Instead, we should use it responsibly and automate as many boring tasks as possible.